Episteca generates training content from your documents—and only your documents. If it can't cite the source, it won't make the claim. Finally, an AI that compliance teams can trust.

Built for teams where a wrong answer costs more than a slow one.

Most AI will answer whether it knows or not.

Ask a general-purpose AI about your internal policies and it will give you something—confident, fluent, and possibly wrong. In compliance training, that's not a minor inconvenience. It's a liability.

You can't deploy training that might contain hallucinated procedures, invented regulations, or subtly incorrect guidance. But you also can't spend six months building courses manually while your policies sit unread in SharePoint.

Bounded generation. Unlimited trust.

Episteca is built on a simple principle: the system only says what it can prove.

Upload your source documents. The AI generates training content by retrieving relevant passages and constructing outputs grounded in that material. Every claim maps back to a specific source. If the retrieval confidence is low, the system flags the gap instead of guessing.
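The Python sketch below makes the "flag the gap instead of guessing" step concrete as retrieval gating. It assumes each retrieved passage already carries a similarity score between 0 and 1. The `Passage` type, the `ground_or_flag` helper, and the 0.75 cut-off are hypothetical illustrations, not Episteca's actual interface or settings.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    doc_id: str   # which uploaded document the passage came from
    text: str
    score: float  # retrieval similarity, assumed to lie in [0, 1]


def ground_or_flag(passages, min_score=0.75, top_k=3):
    """Return the passages to generate from, or a gap report if none is strong enough.

    min_score is a hypothetical cut-off chosen for illustration.
    """
    strong = sorted(
        (p for p in passages if p.score >= min_score),
        key=lambda p: p.score,
        reverse=True,
    )[:top_k]
    if not strong:
        # Nothing reliable was retrieved: surface a gap instead of letting the model guess.
        best = max((p.score for p in passages), default=0.0)
        return {"status": "gap", "best_score": best}
    return {"status": "grounded", "sources": [p.doc_id for p in strong], "passages": strong}


hits = [
    Passage("policy-7.2", "Access reviews occur quarterly.", 0.91),
    Passage("sop-3", "Badges must be worn on site.", 0.42),
]
print(ground_or_flag(hits)["sources"])     # ['policy-7.2']
print(ground_or_flag(hits[1:])["status"])  # gap
```

In this sketch, generation would only run on the `grounded` branch. A `gap` result is the raw material for the documentation-gap report.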

What you get: training that's fast to produce and safe to deploy. What you also get: a clear map of where your documentation has gaps.

The Episteca Difference

It Won't Guess

Most AI systems optimize for sounding helpful. Episteca optimizes for being right. Low-confidence outputs get flagged, not published.

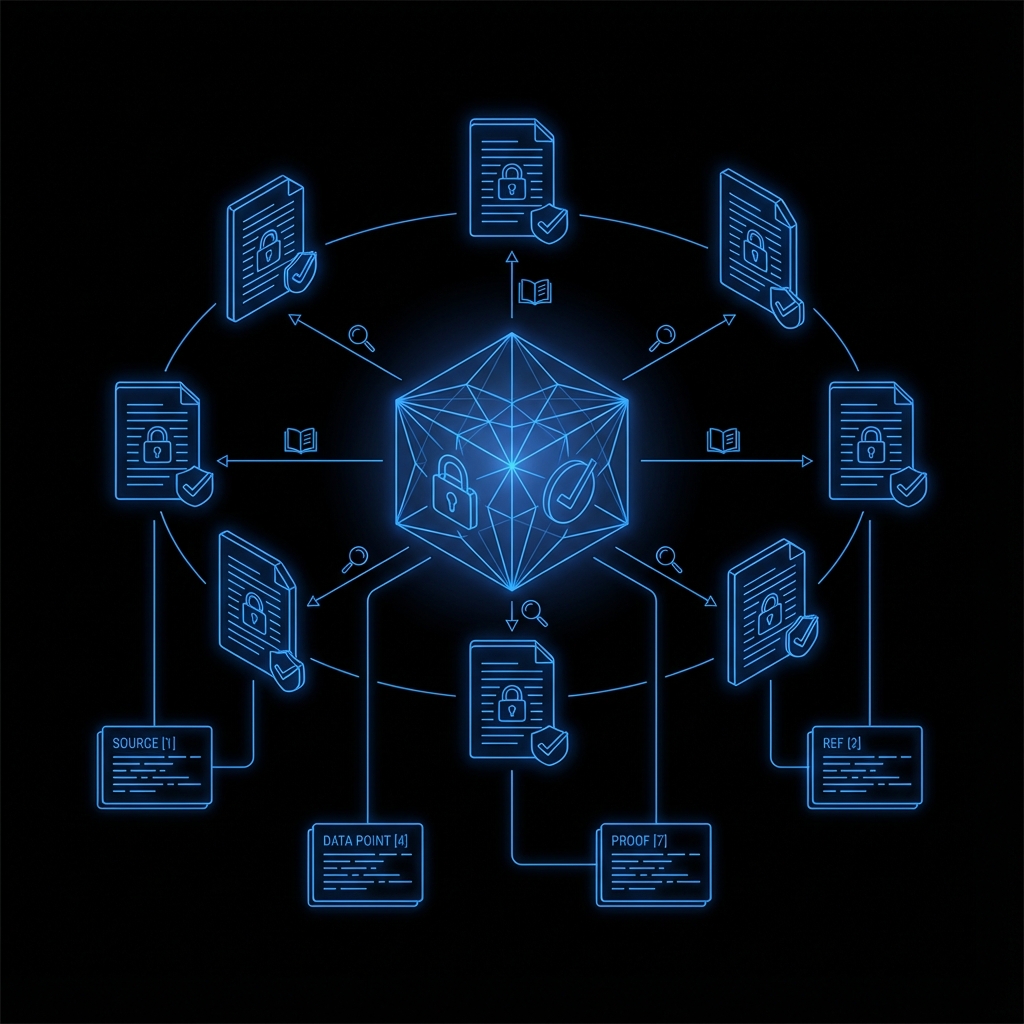

Every Claim Has a Source

Inline citations tie each statement to your documentation. Auditors can trace any claim back to the original policy.
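One way to make a citation auditable is to store the exact character span it points to. That way the cited text can be pulled back out of the original document. The Python sketch below assumes that design. `Citation`, `trace`, `render`, and the `doc_id:start-end` marker format are hypothetical, not a documented Episteca format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    doc_id: str  # identifier of the uploaded source document
    start: int   # character offset where the supporting span begins
    end: int     # character offset where it ends (exclusive)


def trace(corpus: dict[str, str], cite: Citation) -> str:
    """Return the exact source text a citation points to, as an auditor would see it."""
    if cite.doc_id not in corpus:
        raise KeyError(f"unknown source document: {cite.doc_id}")
    text = corpus[cite.doc_id]
    if not 0 <= cite.start < cite.end <= len(text):
        raise ValueError("citation span falls outside the document")
    return text[cite.start:cite.end]


def render(statement: str, cite: Citation) -> str:
    """Attach an inline citation marker to a generated statement."""
    return f"{statement} [{cite.doc_id}:{cite.start}-{cite.end}]"


corpus = {"it-policy": "Passwords must be rotated every 90 days."}
cite = Citation("it-policy", 0, 40)
print(render("Rotate your password every 90 days.", cite))
print(trace(corpus, cite))
```

Storing offsets rather than only a document name lets a citation be checked mechanically. If the policy text is later edited, `trace` returns different text and the citation can be re-verified.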

Gaps Become Visible

When the system says "I don't have enough to answer this," you've discovered a documentation gap—before a learner or regulator does.

Your Documents, Your Boundaries

The AI generates from your uploaded corpus. It doesn't supplement with outside knowledge or general training data. The boundary is the feature.

Built for Compliance

Source-Grounded Retrieval

Content is generated from retrieved passages in your documents, not from the model's general knowledge. Retrieval-augmented generation (RAG), built for compliance.

Confidence-Aware Output

The system knows when retrieval quality is weak. Instead of hallucinating, it flags uncertainty for human review.

Regulatory Context

Upload GDPR policies, RBI circulars, HIPAA procedures—the system recognizes compliance domains and applies appropriate structure.

Technical Depth

Works with dense material: API documentation, clinical protocols, engineering specs. Nuance is preserved, not flattened.

Flexible Delivery

SCORM packages for LMS compliance tracking. Embeddable HTML5 microsites for in-the-flow access. Your content, your channels.

Multimedia Integration

Deliver a rich learning experience with AI-narrated videos, interactive audio modules, and immersive visual storytelling—all generated automatically from your documentation.

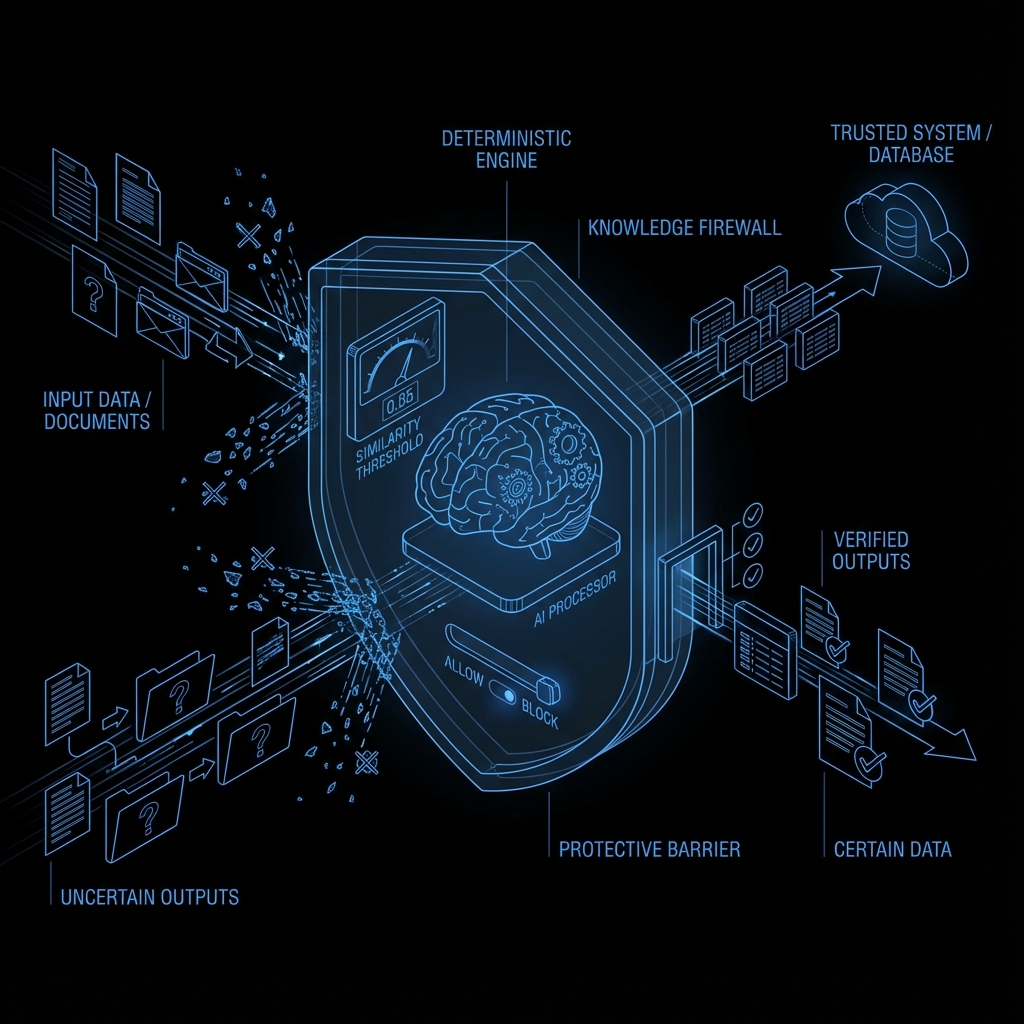

The Deterministic Engine

Unlike standard LLM deployments that rely on probabilistic guessing, Episteca uses a strict Retrieval-Augmented Generation (RAG) framework with a "Knowledge Firewall."

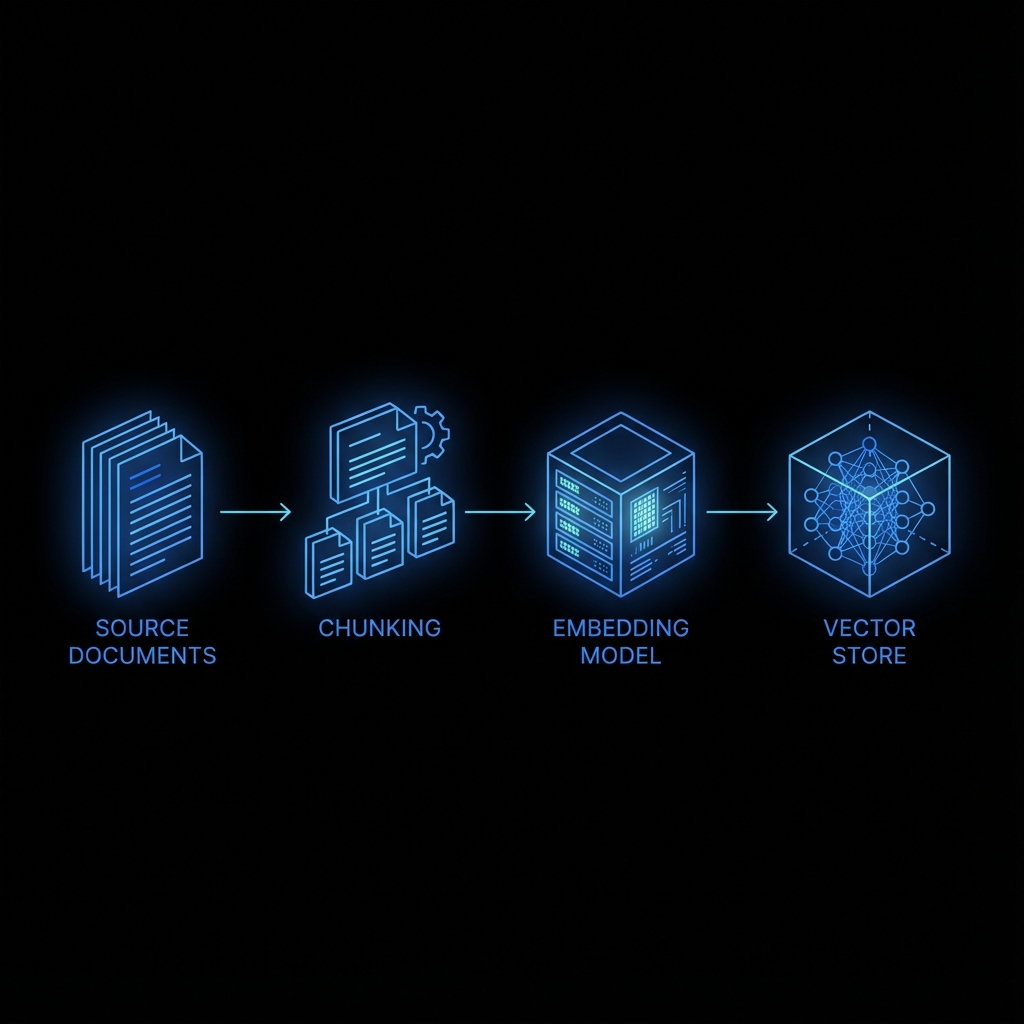

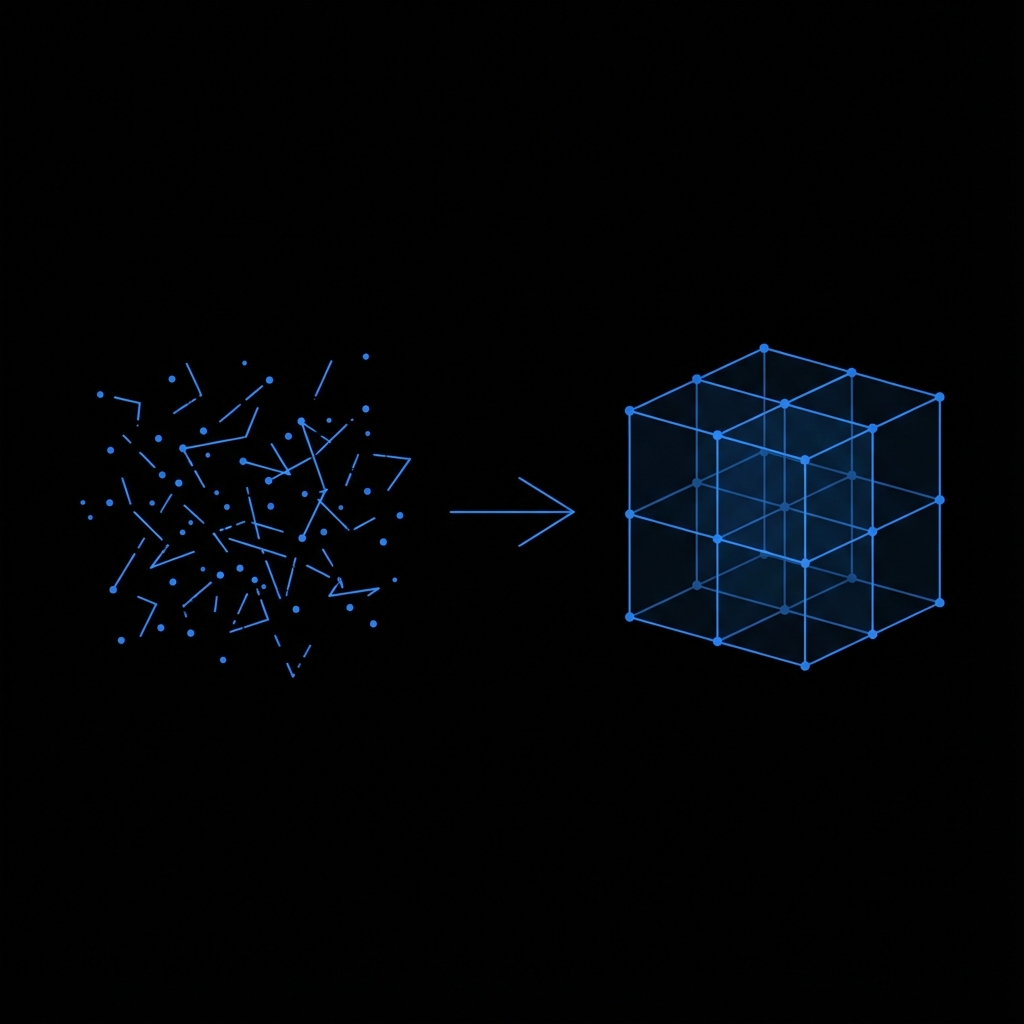

Vector Embeddings

Your documents are chunked and converted into 1536-dimensional vectors for semantic search.
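The Python sketch below illustrates the search side of this step under stated assumptions. A real deployment would call an embedding model. Here, a hashed bag-of-words vector (feature hashing) stands in for one so the example runs offline. As a result, it matches on shared words rather than on meaning. Only the 1536 dimension comes from the text above; `embed`, `search`, and the sample chunks are hypothetical.

```python
import hashlib
import math
import re

DIM = 1536  # dimensionality stated above; everything else here is illustrative


def embed(text: str) -> list[float]:
    """Stand-in embedding: hash each token into one of DIM buckets, then L2-normalize."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def search(query: str, index: list[tuple[str, list[float]]], k: int = 2):
    """Rank indexed chunks by cosine similarity to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, vec), chunk) for chunk, vec in index]
    return sorted(scored, reverse=True)[:k]


chunks = [
    "Visitors must sign in at reception.",
    "Laptops must use full-disk encryption.",
    "Expense reports are due monthly.",
]
index = [(c, embed(c)) for c in chunks]
print(search("Which laptops need disk encryption?", index, k=1))
```

Swapping `embed` for a real model call would leave `search` unchanged. Only the quality of the similarity scores would improve.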

Veracity Check

Every generation is cross-referenced against the retrieved context. If similarity falls below 0.85, generation is blocked.
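A per-sentence version of this check can be sketched in Python. The sketch assumes that each generated sentence is compared against every retrieved passage. To stay self-contained, it uses word-count cosine similarity as a stand-in for an embedding comparison. Only the 0.85 threshold is taken from the text above; `veracity_check` and its return shape are hypothetical.

```python
import math
import re
from collections import Counter

THRESHOLD = 0.85  # cut-off stated above


def _vector(text: str) -> Counter:
    # Lexical stand-in for an embedding: token counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Cosine similarity between two word-count vectors."""
    va, vb = _vector(a), _vector(b)
    dot = sum(count * vb[token] for token, count in va.items())
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(sum(v * v for v in vb.values()))
    return dot / norm if norm else 0.0


def veracity_check(sentences: list[str], context: list[str]) -> dict:
    """Block the output if any sentence lacks a sufficiently similar retrieved passage."""
    unsupported = []
    for s in sentences:
        best = max((similarity(s, c) for c in context), default=0.0)
        if best < THRESHOLD:
            unsupported.append((s, round(best, 2)))
    return {"blocked": bool(unsupported), "unsupported": unsupported}


context = ["Laptops must use full-disk encryption."]
draft = ["Laptops must use full-disk encryption.", "Phones must use a VPN."]
print(veracity_check(draft, context))
```

With a purely lexical measure, 0.85 is very strict and would also block faithful paraphrases. The same number behaves differently on real embedding similarities.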

From Policy to Courseware

A seamless pipeline that transforms static PDF libraries into interactive, verifiable learning modules in minutes.

01. Ingest

Upload PDFs, SOPs, policy manuals, technical documentation. The system chunks, embeds, and indexes your content for retrieval.
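Chunking is the part of ingestion that determines what a citation can later point to. The Python sketch below assumes fixed-size word windows with overlap. Real pipelines often count model tokens instead, and Episteca's actual chunk size is not stated. The 200/40 defaults and the `Chunk` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_id: str      # source document, kept so every chunk stays citable
    index: int       # position of the chunk within the document
    start_word: int  # offset of the chunk's first word in the document
    text: str


def chunk_document(doc_id: str, text: str, size: int = 200, overlap: int = 40) -> list[Chunk]:
    """Split a document into overlapping word windows, keeping provenance for citations."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # A new window is only started while it would still contain words not yet covered.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [
        Chunk(doc_id, i, start, " ".join(words[start:start + size]))
        for i, start in enumerate(starts)
    ]


doc = " ".join(f"w{i}" for i in range(10))
chunks = chunk_document("sop-1", doc, size=4, overlap=1)
print([c.start_word for c in chunks])  # [0, 3, 6]
print(chunks[-1].text)                 # w6 w7 w8 w9
```

With overlap, text near a boundary appears in two consecutive chunks. A short sentence cut at one boundary is therefore usually intact in the neighboring chunk.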

02. Generate

Ask for a course module, assessment, or summary. The AI retrieves relevant source material and generates content grounded in those passages—with inline citations.
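The Python sketch below shows one way to wire retrieved passages and inline citations together. It numbers the passages, instructs the model to cite them, and then rejects any draft sentence whose marker is missing or points at a source that was not supplied. The prompt wording, the `INSUFFICIENT_SOURCE` sentinel, and both helper names are hypothetical. The model call itself is omitted because the text does not say which model is used.

```python
import re


def build_prompt(request: str, passages: list[tuple[str, str]]) -> str:
    """Number the retrieved passages and tell the model to cite them and nothing else."""
    sources = "\n".join(
        f"[{n}] ({doc_id}) {text}" for n, (doc_id, text) in enumerate(passages, start=1)
    )
    return (
        "Use ONLY the numbered sources below. End every sentence with its source, "
        "e.g. 'Laptops must be encrypted [1].'\n"
        "If the sources do not cover something, write INSUFFICIENT_SOURCE instead.\n\n"
        f"Sources:\n{sources}\n\nTask: {request}"
    )


def uncited_sentences(draft: str, n_sources: int) -> list[str]:
    """Return draft sentences with no citation, or with a citation to an unknown source."""
    bad = []
    for sentence in filter(None, re.split(r"(?<=[.!?])\s+", draft.strip())):
        refs = [int(m) for m in re.findall(r"\[(\d+)\]", sentence)]
        if not refs or any(r < 1 or r > n_sources for r in refs):
            bad.append(sentence)
    return bad


passages = [
    ("it-policy", "Laptops must use full-disk encryption."),
    ("hr-handbook", "Badges must be worn on site."),
]
prompt = build_prompt("Write one quiz question per source.", passages)
print(uncited_sentences("Laptops must be encrypted [1]. Badges are optional [3].", 2))
```

A bare `INSUFFICIENT_SOURCE` reply carries no marker, so it is caught by the same check and routed to review rather than published.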

03. Enforce

Any output that lacks high-confidence source backing gets flagged. The system tells you what it doesn't know, so you can fill the gap or approve the limitation.

04. Deploy

Export as SCORM for your LMS, or embed directly in Slack, Teams, Notion, or SharePoint. Verified content, wherever your people work.

Solutions by Industry

Tailored models and retrieval pipelines for high-stakes industries where accuracy is mandated by law.

Finance & Banking

RBI circulars, SEC guidelines, internal risk policies—dense, high-stakes, constantly updated. Generate microlearning your teams will actually complete, with every claim traceable to the source regulation.

Healthcare & Pharma

Clinical terminology and procedural precision matter. Build training for trial protocols, patient data handling, and care procedures—grounded entirely in your approved documentation.

SaaS & Technology

Product docs change weekly. Upload release notes, API specs, and help center content. Regenerate training automatically, always in sync with your product. When the system flags gaps, you know your docs need updating too.
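Keeping training in sync largely comes down to knowing which modules depend on which documents. The Python sketch below assumes that each generated module records the documents it cites, which inline citations make possible. It also assumes that a content hash is stored per document. `fingerprint`, `modules_to_regenerate`, and the sample data are hypothetical.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Content hash used to detect whether a source document changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def modules_to_regenerate(
    old: dict[str, str], new: dict[str, str], citations: dict[str, set[str]]
) -> set[str]:
    """Return modules citing any document that was edited or removed.

    old/new map doc_id -> fingerprint; citations maps module -> cited doc_ids.
    """
    changed = {doc for doc, fp in old.items() if new.get(doc) != fp}
    return {module for module, docs in citations.items() if docs & changed}


old = {"api-ref": fingerprint("GET /v1/users"), "faq": fingerprint("Reset via settings.")}
new = {"api-ref": fingerprint("GET /v2/users"), "faq": fingerprint("Reset via settings.")}
citations = {"onboarding": {"api-ref", "faq"}, "billing": {"faq"}}
print(modules_to_regenerate(old, new, citations))  # {'onboarding'}
```

Newly added documents never appear in `changed` because no existing module cites them yet. They matter more for re-checking questions that were previously flagged as gaps.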

Why we built this.

Episteca is an American company based in Cambridge, MA, founded by MIT and Harvard alumni.

We spent years in media technology—immersive storytelling, AR/VR, social impact work. Consulting with enterprise clients, we kept hitting the same wall: organizations with deep institutional knowledge locked in documents nobody read, and no safe way to scale it.

Generic AI was fast but unreliable. Manual development was safe but slow. Compliance teams were stuck saying no to both.

So we built an AI that solves the actual problem: not "how do we generate content faster" but "how do we generate content we can stand behind." The answer was constraint. An engine that only says what it can cite, and admits when it can't.

That's Episteca. Knowledge you can prove.

Resources

The Architecture of Certainty

A deep dive into Retrieval-Augmented Generation (RAG) vs. Fine-Tuning for compliance use cases.

See the boundaries in action.

Upload a sample document. We'll show you what Episteca generates—and where it says "I don't know."

Your documents are processed in isolated environments and never used to train external models.